Risk Management & Position Sizing: An Engineer’s Guide to Trading

Trading can seem like a thrilling opportunity to achieve financial freedom, but the reality for most retail traders is starkly different. Statistics show that the vast majority of retail traders fail, not because they lack the ability to pick profitable trades, but due to inadequate risk management. Without a structured approach to managing losses and protecting capital, even a streak of good trades can easily be undone by one bad decision. The key to success in trading lies not in predicting the market perfectly but in managing risk effectively.

As engineers, we are trained to solve complex problems using quantitative methods, rigorous analysis, and logical thinking. These skills are highly transferable to trading risk management and position sizing. By approaching trading as a system that can be optimized and controlled, engineers can develop strategies to minimize losses and maximize returns. This guide is designed to bridge the gap between engineering principles and the world of trading, equipping you with the tools and frameworks to succeed in one of the most challenging arenas in finance.

Table of Contents

- Kelly Criterion

- Position Sizing Methods

- Maximum Drawdown

- Value at Risk

- Stop-Loss Strategies

- Portfolio Risk

- Risk-Adjusted Returns

- Risk Management Checklist

- FAQ

### The Kelly Criterion

The Kelly Criterion is a popular mathematical formula used in trading and gambling to determine the optimal bet size for maximizing long-term growth. It balances the trade-off between risk and reward, ensuring that traders do not allocate too much or too little capital to a single trade. The formula is as follows:

\[
f^* = \frac{bp - q}{b}
\]

Where:

- \( f^* \): The fraction of your capital to allocate to the trade.
- \( b \): The odds received on the trade (net return per dollar wagered).
- \( p \): The probability of winning the trade.
- \( q \): The probability of losing the trade (\( q = 1 - p \)).

---

#### Worked Example

Suppose you’re considering a trade where the probability of success (\( p \)) is 60% (or 0.6), and the odds (\( b \)) are 2:1. That means for every $1 risked, you gain $2 in profit if you win and lose the $1 if you lose. The probability of losing (\( q \)) is therefore 40% (or 0.4). Using the Kelly Criterion formula:

\[
f^* = \frac{(2 \times 0.6) - 0.4}{2}
\]

\[
f^* = \frac{1.2 - 0.4}{2}
\]

\[
f^* = \frac{0.8}{2} = 0.4
\]

According to the Kelly Criterion, you should allocate 40% of your capital to this trade.

---

#### Full Kelly vs Half Kelly vs Quarter Kelly

The Full Kelly strategy uses the exact fraction (\( f^* \)) calculated by the formula. However, this can lead to high volatility due to the aggressive nature of the strategy. To mitigate risk, many traders use a fractional Kelly approach:

- **Half Kelly**: Use 50% of the \( f^* \) value.
- **Quarter Kelly**: Use 25% of the \( f^* \) value.

For example, if \( f^* = 0.4 \), the Half Kelly fraction would be \( 0.2 \) (20% of capital), and the Quarter Kelly fraction would be \( 0.1 \) (10% of capital). These fractional approaches reduce portfolio volatility and better handle estimation errors.

---

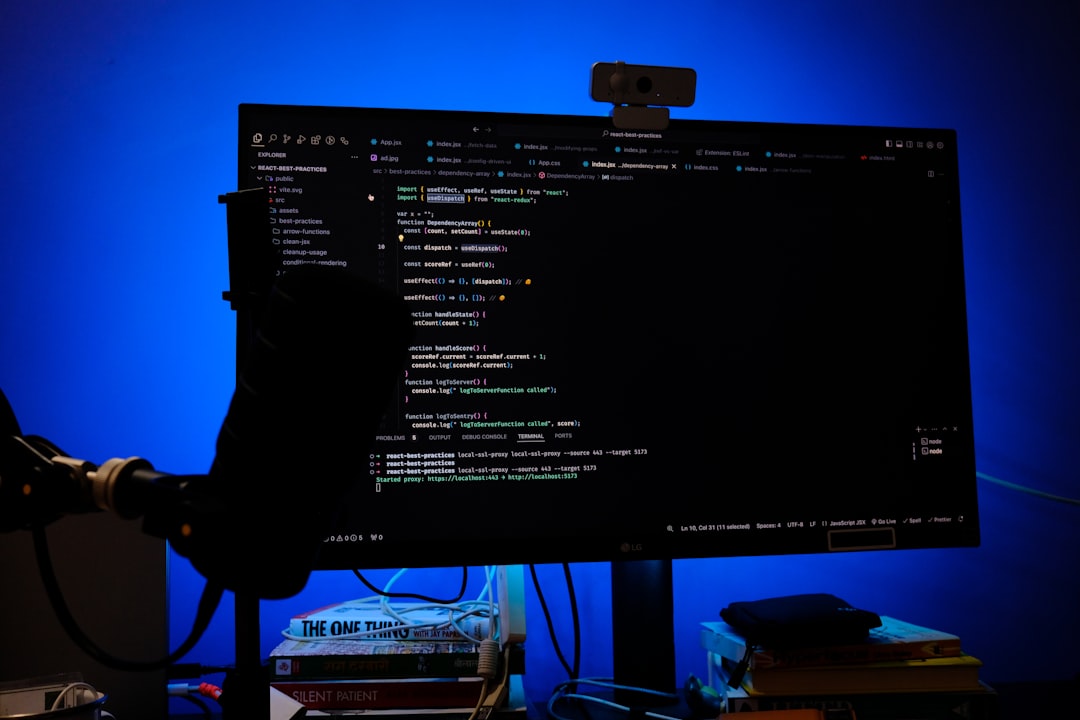

#### JavaScript Implementation of Kelly Calculator

You can implement a simple Kelly Criterion calculator using JavaScript. Here’s an example:

```javascript
// Kelly Criterion Calculator
function calculateKelly(b, p) {
  const q = 1 - p; // Probability of losing
  const f = (b * p - q) / b; // Kelly formula
  return f;
}

// Example usage
const b = 2; // Odds (2:1)
const p = 0.6; // Probability of winning (60%)

const fullKelly = calculateKelly(b, p);
const halfKelly = fullKelly / 2;
const quarterKelly = fullKelly / 4;

console.log('Full Kelly Fraction:', fullKelly);
console.log('Half Kelly Fraction:', halfKelly);
console.log('Quarter Kelly Fraction:', quarterKelly);
```

---

#### When Kelly Over-Bets

The Kelly Criterion assumes precise knowledge of probabilities and odds, which is rarely available in real-world trading. Overestimating \( p \) (which is the same as underestimating \( q \)) or overestimating \( b \) leads to over-betting, exposing you to significant risks. Additionally, in markets with “fat tails” (where extreme events occur more frequently than a normal distribution predicts), the Kelly Criterion can result in overly aggressive allocations, potentially causing large drawdowns.

To mitigate these risks:

1. Use conservative estimates for probabilities.

2. Consider using fractional Kelly (e.g., Half or Quarter Kelly).

3. Account for the possibility of fat tails and model robustness in your risk management strategy.

While the Kelly Criterion is a powerful tool for optimizing growth, it requires prudent application to avoid catastrophic losses.
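To see why over-betting is costly, it can help to look at the expected log growth per trade, \( g(f) = p \ln(1 + bf) + q \ln(1 - f) \), which is the quantity the Kelly fraction maximizes for a simple win/lose bet. The sketch below evaluates it for the worked example (b = 2, p = 0.6). The function name `expectedLogGrowth` is illustrative, and the model assumes the full stake is lost on a losing trade.

```javascript
// Expected log growth per trade when betting fraction f of capital on a
// binary bet: win b per unit staked with probability p, lose the stake otherwise.
function expectedLogGrowth(f, b, p) {
  if (f < 0 || f >= 1) throw new RangeError('f must be in [0, 1)');
  const q = 1 - p;
  return p * Math.log(1 + b * f) + q * Math.log(1 - f);
}

const odds = 2;
const winProb = 0.6;
const kelly = (odds * winProb - (1 - winProb)) / odds; // about 0.4

// Compare quarter, half, full, and double Kelly
for (const multiple of [0.25, 0.5, 1, 2]) {
  const f = kelly * multiple;
  const growth = expectedLogGrowth(f, odds, winProb);
  console.log(`${multiple} x Kelly (f = ${f.toFixed(2)}): ${growth.toFixed(4)}`);
}
```

For these inputs, Half Kelly keeps roughly three quarters of the full-Kelly growth rate, while betting twice the Kelly fraction turns expected growth negative.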

### Position Sizing Methods

Position sizing is a vital aspect of trading risk management: it determines the number of units or contracts to trade per position. A well-chosen position sizing technique helps traders manage their capital wisely, survive drawdowns, and maximize profitability. Below are some popular position sizing methods with examples and a comparison.

---

#### 1. Fixed Dollar Method

In this method, you risk a fixed dollar amount on every trade, regardless of your account size. For instance, if you decide to risk $100 per trade, your position size will depend on the distance of your stop loss.

##### Example:

```javascript
const fixedDollarSize = (riskPerTrade, stopLoss) => {
  return riskPerTrade / stopLoss; // Position size = risk / stop-loss
};

console.log(fixedDollarSize(100, 2)); // Risk $100 with $2 stop-loss
```

*Pros:* Simple to implement and consistent.

*Cons:* Does not scale with account size or volatility.

---

#### 2. Fixed Percentage Method (Recommended)

This method involves risking a fixed percentage (e.g., 1% or 2%) of your total portfolio per trade. It’s one of the most widely recommended methods for its adaptability and scalability.

##### JavaScript Example:

```javascript
function fixedPercentageSize(accountBalance, riskPercentage, stopLoss) {
  const riskAmount = accountBalance * (riskPercentage / 100);
  return riskAmount / stopLoss; // Position size = risk / stop-loss
}

// Example usage
console.log(fixedPercentageSize(10000, 2, 2)); // 2% risk of $10,000 account with $2 stop-loss
```

*Pros:* Scales with account growth and prevents large losses.

*Cons:* Requires frequent recalculation as the account size changes.

---

#### 3. Volatility-Based (ATR Method)

This approach uses the Average True Range (ATR) indicator to measure market volatility. Position size is calculated as the risk amount divided by ATR value.

##### Example:

```javascript
const atrPositionSize = (riskPerTrade, atrValue) => {
  return riskPerTrade / atrValue; // Position size = risk / ATR
};

console.log(atrPositionSize(100, 1.5)); // Risk $100 with ATR of 1.5
```

*Pros:* Adapts to market volatility, ensuring proportional risk.

*Cons:* Requires ATR calculation and may be complex for beginners.
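The ATR value itself comes from price bars. Here is a minimal sketch, assuming each bar is an object with `high`, `low`, and `close` fields (a hypothetical shape) and using a simple average of the true range over the last `period` bars rather than Wilder's smoothing:

```javascript
// True range of a bar: the largest of high - low, |high - prevClose|, |low - prevClose|.
function trueRange(bar, prevClose) {
  return Math.max(
    bar.high - bar.low,
    Math.abs(bar.high - prevClose),
    Math.abs(bar.low - prevClose)
  );
}

// Simple-average ATR over the last `period` bars. Needs period + 1 bars,
// because each true range uses the previous bar's close.
function averageTrueRange(bars, period = 14) {
  if (bars.length < period + 1) {
    throw new RangeError(`need at least ${period + 1} bars`);
  }
  let sum = 0;
  for (let i = bars.length - period; i < bars.length; i++) {
    sum += trueRange(bars[i], bars[i - 1].close);
  }
  return sum / period;
}

// Example with made-up bars
const sampleBars = [
  { high: 10, low: 9, close: 9.5 },
  { high: 11, low: 9.5, close: 10.5 },
  { high: 12, low: 10, close: 11 },
  { high: 11.5, low: 10.5, close: 11 },
];
const atr = averageTrueRange(sampleBars, 3);
console.log('ATR:', atr);
console.log('Units for $100 risk:', 100 / atr);
```

With these sample bars the three true ranges are 1.5, 2, and 1, so the ATR is 1.5, the same value used in the sizing example above.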

---

#### 4. Fixed Ratio (Ryan Jones Method)

This method is based on trading units and scaling up or down after certain profit milestones. For example, a trader might increase position size after every $500 profit.

##### Example:

```javascript
// Simplified scaling: one extra unit for every `delta` dollars of accumulated profit
const fixedRatioSize = (initialUnits, profit, delta) => {
  return initialUnits + Math.floor(Math.max(0, profit) / delta);
};

console.log(fixedRatioSize(1, 1500, 500)); // Start with 1 unit, add one per $500 of profit
```

*Pros:* Encourages discipline and controlled scaling.

*Cons:* Requires careful calibration of delta and tracking milestones.
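In the fixed ratio method as it is usually described, moving from n to n + 1 units requires n × delta of additional profit, so each added unit has to be earned by the units already held. The cumulative profit needed to trade N units is then delta × N(N − 1) / 2. The sketch below inverts that relationship; the name `fixedRatioUnits` is illustrative, and it assumes the trader starts from one unit.

```javascript
// Fixed ratio sizing sketch: going from n to n + 1 units takes n * delta of
// extra profit, so total profit for N units is delta * N * (N - 1) / 2.
function fixedRatioUnits(profit, delta) {
  if (delta <= 0) throw new RangeError('delta must be positive');
  if (profit <= 0) return 1; // never drop below the starting unit
  return Math.floor((1 + Math.sqrt(1 + (8 * profit) / delta)) / 2);
}

for (const profit of [0, 500, 1500, 3000]) {
  console.log(`Profit $${profit}: ${fixedRatioUnits(profit, 500)} unit(s)`);
}
```

With delta = 500, the second unit is added at $500 of profit, the third at $1,500, and the fourth at $3,000, so size grows more slowly as the account grows.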

---

#### Comparison Table

| **Method** | **Pros** | **Cons** |
|---|---|---|
| Fixed Dollar | Simple and consistent. | Does not adapt to account growth. |
| Fixed Percentage | Scales with account size; highly recommended. | Requires recalculations. |
| Volatility-Based (ATR) | Reflects market conditions. | Complex for beginners; needs ATR data. |
| Fixed Ratio | Encourages scaling with profits. | Requires predefined milestones. |

---

**Conclusion:**

Among these methods, the Fixed Percentage method is the most recommended for its simplicity and scalability. It ensures that traders risk an appropriate amount per trade, adapting to both losses and growth in the account balance. Using volatility-based methods (like ATR) adds another layer of precision but may be more suitable for experienced traders. Always choose a method that aligns with your trading goals and risk tolerance.

### Maximum Drawdown Analysis

Maximum Drawdown (MDD) is a critical metric in trading that measures the largest peak-to-trough decline in an equity curve over a specific time period. It shows the worst loss the portfolio actually suffered in that period, helping traders and investors gauge the risk of significant losses.

The formula for calculating Maximum Drawdown is:

\[
\text{MDD} = \frac{\text{Peak Value} - \text{Trough Value}}{\text{Peak Value}}
\]

Why does Maximum Drawdown matter more than returns? While returns show profitability, MDD reveals the resilience of a trading strategy during periods of market stress. A strategy with high returns but deep drawdowns can lead to emotional decision-making and potential financial ruin.

Recovery from drawdowns is also non-linear, adding to its importance. For instance, if your portfolio drops by 50%, you’ll need a 100% gain just to break even. This asymmetry underscores the need to minimize drawdowns in any trading system.
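The asymmetry follows from simple arithmetic: after a fractional drawdown d, the remaining capital is 1 − d, so the gain needed to return to the peak is d / (1 − d). A short sketch (the function name `requiredRecoveryGain` is illustrative):

```javascript
// Gain required to get back to the previous peak after a fractional drawdown.
function requiredRecoveryGain(drawdown) {
  if (drawdown < 0 || drawdown >= 1) {
    throw new RangeError('drawdown must be in [0, 1)');
  }
  return drawdown / (1 - drawdown);
}

for (const d of [0.1, 0.2, 0.5, 0.75]) {
  const gain = requiredRecoveryGain(d);
  console.log(`${(d * 100).toFixed(0)}% drawdown needs a ${(gain * 100).toFixed(1)}% gain`);
}
```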

Below is a JavaScript function to calculate the Maximum Drawdown from an equity curve:

```javascript
function calculateMaxDrawdown(equityCurve) {
  let peak = equityCurve[0];
  let maxDrawdown = 0;

  for (const value of equityCurve) {
    if (value > peak) {
      peak = value; // New high-water mark
    }
    const drawdown = (peak - value) / peak; // Decline from the running peak
    maxDrawdown = Math.max(maxDrawdown, drawdown);
  }

  return maxDrawdown;
}

// Example usage
const equityCurve = [100, 120, 90, 80, 110];
console.log('Maximum Drawdown:', calculateMaxDrawdown(equityCurve));
```

### Value at Risk (VaR)

Value at Risk (VaR) is a widely used risk management metric that estimates the potential loss of a portfolio over a specified time period with a given confidence level. It helps quantify the risk exposure and prepare for adverse market movements.

#### 1. Historical VaR

Historical VaR estimates the potential loss from historical portfolio returns. The past returns are sorted from worst to best, and the loss at the percentile matching the confidence level is read off (e.g., the 5th percentile for 95% confidence).

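#### 2. Parametric (Gaussian) VaR

Parametric VaR assumes portfolio returns follow a normal distribution. In its simplest form, which treats the mean return as zero, it uses the following formula:

\[
\text{VaR} = Z \cdot \sigma \cdot \sqrt{t}
\]

Where:

- Z is the Z-score corresponding to the confidence level, taken as a positive number so that VaR is reported as a loss (e.g., 1.645 for 95%, 2.326 for 99%)
- σ is the standard deviation of the portfolio’s returns per period
- t is the time horizon, measured in the same periods as σ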
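The formula above can be sketched in a few lines. This version estimates σ as the sample standard deviation of a return series and takes the Z-score as an argument; the function names are illustrative, and the zero-mean, normal-returns assumption is carried over from the formula.

```javascript
// Sample standard deviation of a return series.
function sampleStdDev(values) {
  const mean = values.reduce((sum, v) => sum + v, 0) / values.length;
  const variance =
    values.reduce((sum, v) => sum + (v - mean) ** 2, 0) / (values.length - 1);
  return Math.sqrt(variance);
}

// Parametric VaR as a positive fraction of portfolio value.
// zScore: 1.645 for 95%, 2.326 for 99%. horizon: number of periods, in the
// same units as the returns. Assumes zero-mean, normally distributed returns.
function parametricVaR(returns, zScore, horizon = 1) {
  if (returns.length < 2) throw new RangeError('need at least two returns');
  return zScore * sampleStdDev(returns) * Math.sqrt(horizon);
}

const dailyReturns = [-0.02, -0.01, 0.01, 0.02, -0.03, 0.03, -0.04];
console.log('1-day 95% VaR:', parametricVaR(dailyReturns, 1.645));
console.log('10-day 95% VaR:', parametricVaR(dailyReturns, 1.645, 10));
```

Multiplying the result by the portfolio value converts it to a dollar figure.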

#### 3. Monte Carlo VaR

Monte Carlo VaR relies on generating thousands of random simulations of potential portfolio returns. By analyzing these simulations, traders can determine the worst-case losses at a specified confidence level. Although computationally intensive, this approach captures non-linear risks better than historical or parametric methods.
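A minimal sketch of the idea follows. To stay short it simulates one-period returns from a normal distribution, which is a simplifying assumption: in practice the value of the method comes from swapping in a richer return model. The seeded generator (`mulberry32`) and the Box-Muller transform are only there to make the run repeatable, and all function names are illustrative.

```javascript
// Small seeded PRNG (mulberry32) so the simulation is repeatable.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = Math.imul(a ^ (a >>> 15), a | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Standard normal draw via the Box-Muller transform.
function randomNormal(rng) {
  const u1 = 1 - rng(); // in (0, 1], avoids log(0)
  const u2 = rng();
  return Math.sqrt(-2 * Math.log(u1)) * Math.cos(2 * Math.PI * u2);
}

// Monte Carlo VaR as a positive fraction of portfolio value, assuming
// one-period returns are normal with the given mean and standard deviation.
function monteCarloVaR(mean, stdDev, confidenceLevel, simulations = 20000, seed = 42) {
  const rng = mulberry32(seed);
  const simulated = [];
  for (let i = 0; i < simulations; i++) {
    simulated.push(mean + stdDev * randomNormal(rng));
  }
  simulated.sort((x, y) => x - y); // worst returns first
  const index = Math.floor((1 - confidenceLevel) * simulated.length);
  return -simulated[index];
}

console.log('Monte Carlo 95% VaR:', monteCarloVaR(0, 0.02, 0.95));
```

Under these assumptions the estimate should land close to the parametric value of 1.645 × 0.02, which is a useful sanity check before moving to a more realistic model.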

Below is a JavaScript example to calculate Historical VaR:

```javascript
function calculateHistoricalVaR(returns, confidenceLevel) {
  // Sort a copy so the caller's array is not reordered
  const sortedReturns = [...returns].sort((a, b) => a - b);
  // Position of the return at the (1 - confidence) percentile
  const index = Math.floor((1 - confidenceLevel) * sortedReturns.length);
  // Report the loss as a positive number
  return -sortedReturns[index];
}

// Example usage
const portfolioReturns = [-0.02, -0.01, 0.01, 0.02, -0.03, 0.03, -0.04];
const confidenceLevel = 0.95; // 95% confidence level
console.log('Historical VaR:', calculateHistoricalVaR(portfolioReturns, confidenceLevel));
```

Common confidence levels for VaR are 95% and 99%, representing the likelihood of loss not exceeding the calculated amount. For example, a 95% confidence level implies a 5% chance of exceeding the VaR estimate.

### Stop-Loss Strategies

Stop-loss strategies are essential tools for managing risk and minimizing losses in trading. These predefined exit points help traders protect their capital and maintain emotional discipline. Here are some effective stop-loss methods:

- Fixed Percentage Stop: This approach involves setting a stop-loss at a specific percentage below the entry price. For example, a trader might choose a 2% stop, so that the planned loss on any single trade is about 2% of the position’s value (a price gap through the stop can make the actual loss larger).

- ATR-Based Stop: The Average True Range (ATR) is a volatility indicator that measures market fluctuations. Setting a stop-loss at 2x ATR below the entry price accounts for market noise while protecting against excessive losses.

- Trailing Stop Implementation: A trailing stop adjusts dynamically as the trade moves in the trader’s favor. This strategy locks in profits while minimizing downside risk, offering flexibility in rapidly changing markets.

- Time-Based Stop: This strategy exits a position after a predetermined period (e.g., N days) if the trade has not moved as expected. It helps prevent tying up capital in stagnant trades.
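The trailing stop in the list above can be sketched as a ratchet: the stop follows the highest price seen so far and never moves down. This version is for a long position and assumes the stop is checked once per price in the series; `trailingStopExit` is an illustrative name.

```javascript
// Trailing stop for a long position: the stop follows the highest price seen
// so far at a fixed percentage distance and never moves down.
function trailingStopExit(prices, trailPercent) {
  if (prices.length === 0) throw new RangeError('prices must not be empty');
  let highest = prices[0];
  let stop = highest * (1 - trailPercent / 100);

  for (let i = 1; i < prices.length; i++) {
    const price = prices[i];
    if (price <= stop) {
      return { exitIndex: i, stop }; // stop was hit at this price
    }
    if (price > highest) {
      highest = price;
      stop = highest * (1 - trailPercent / 100); // ratchet the stop upward
    }
  }
  return { exitIndex: -1, stop }; // still in the trade
}

console.log(trailingStopExit([100, 104, 110, 108, 104, 112], 5));
```

In the example the stop rises from 95 to about 104.5 as the price reaches 110, and the position is closed at index 4 when the price falls back to 104.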

For traders looking to automate risk management, a JavaScript-based ATR stop-loss calculator can be useful. Given the ATR value, entry price, and position size, it returns a stop-loss level and the dollar amount at risk. Such tools streamline decision-making and keep stop placement consistent from trade to trade.
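A calculator along those lines might look like the sketch below. It handles a long position only, defaults to the 2x ATR multiple mentioned above, and uses illustrative names (`atrStopLoss`, `riskPerUnit`, `totalRisk`).

```javascript
// ATR-based stop for a long position, with the dollar risk implied by the size.
function atrStopLoss(entryPrice, atr, positionSize, multiplier = 2) {
  if (atr <= 0 || positionSize <= 0) {
    throw new RangeError('atr and positionSize must be positive');
  }
  const stopDistance = multiplier * atr;
  return {
    stopPrice: entryPrice - stopDistance, // stop sits below entry for a long
    riskPerUnit: stopDistance,
    totalRisk: stopDistance * positionSize,
  };
}

// Entry at $50, ATR of 1.5, 100 shares
console.log(atrStopLoss(50, 1.5, 100));
```

For a short position the stop would sit above the entry, so `stopPrice` would add the stop distance instead of subtracting it.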

### Portfolio-Level Risk

Managing portfolio-level risk is just as critical as handling individual trade risk. A well-diversified, balanced portfolio can help mitigate losses and achieve long-term profitability. Consider the following factors when evaluating portfolio risk:

- Correlation Between Positions: Ensure that positions within your portfolio are not overly correlated. Highly correlated trades can amplify risk, as losses in one position may be mirrored across others.

- Maximum Correlated Exposure: Limit exposure to correlated assets to avoid excessive concentration risk. For instance, if two stocks tend to move together, allocate a smaller percentage to each rather than overloading the portfolio.

- Sector and Asset Class Diversification: Spread investments across different sectors, industries, and asset classes. Diversification reduces the impact of a downturn in any single sector or market.

- Portfolio Heat: This metric represents the total open risk across all positions in the portfolio. Monitoring portfolio heat ensures that cumulative risk remains within acceptable levels, avoiding overexposure.

- Risk Per Portfolio: A general rule of thumb is to never risk more than 6% of the total portfolio value at any given time. If every open position hit its stop at once, the loss would then be limited to roughly 6%, leaving most of the capital intact.
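The correlation check in the list above can be made concrete with the Pearson correlation of two return series. A minimal sketch, assuming both series cover the same periods and neither is constant (the function name `correlation` is illustrative):

```javascript
// Pearson correlation between two equally long return series.
// Returns a value between -1 and 1 (NaN if either series is constant).
function correlation(xs, ys) {
  if (xs.length !== ys.length || xs.length < 2) {
    throw new RangeError('series must have the same length of at least 2');
  }
  const n = xs.length;
  const meanX = xs.reduce((sum, v) => sum + v, 0) / n;
  const meanY = ys.reduce((sum, v) => sum + v, 0) / n;

  let cov = 0;
  let varX = 0;
  let varY = 0;
  for (let i = 0; i < n; i++) {
    const dx = xs[i] - meanX;
    const dy = ys[i] - meanY;
    cov += dx * dy;
    varX += dx * dx;
    varY += dy * dy;
  }
  return cov / Math.sqrt(varX * varY);
}

// Made-up daily returns for two positions
const returnsA = [0.01, -0.02, 0.015, 0.005, -0.01];
const returnsB = [0.012, -0.018, 0.01, 0.007, -0.012];
console.log('Correlation:', correlation(returnsA, returnsB).toFixed(2));
```

Values near 1 suggest the two positions behave like one larger position, which is the case the exposure limits above are meant to catch.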

By addressing these considerations, traders can build a resilient portfolio that balances risk and reward. Proper portfolio risk management is a cornerstone of successful trading, helping to weather market volatility and achieve consistent results over time.
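Portfolio heat is straightforward to compute once every position has a stop. The sketch below sums the entry-to-stop distance times size for each position and compares the total with the 6% rule of thumb; the position fields (`entry`, `stop`, `size`) and the sample tickers are hypothetical.

```javascript
// Portfolio heat: total open risk across positions as a fraction of equity.
// Each position's open risk is the distance from entry to stop times its size.
function portfolioHeat(positions, equity) {
  if (equity <= 0) throw new RangeError('equity must be positive');
  const openRisk = positions.reduce(
    (sum, pos) => sum + Math.abs(pos.entry - pos.stop) * pos.size,
    0
  );
  return openRisk / equity;
}

const openPositions = [
  { symbol: 'AAA', entry: 50, stop: 47, size: 100 }, // $300 at risk
  { symbol: 'BBB', entry: 20, stop: 19, size: 200 }, // $200 at risk
  { symbol: 'CCC', entry: 100, stop: 96, size: 50 }, // $200 at risk
];

const heat = portfolioHeat(openPositions, 10000);
const maxHeat = 0.06; // the 6% rule of thumb from the text
console.log('Portfolio heat:', (heat * 100).toFixed(1) + '%');
console.log('Within limit:', heat <= maxHeat);
```

Here $700 is at risk on a $10,000 account, so heat is 7% and the check fails, which would argue for trimming a position or tightening a stop before adding new trades.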

### Risk-Adjusted Return Metrics

Understanding risk-adjusted return metrics is critical to evaluating the performance of an investment or portfolio. Below are three key metrics commonly used in risk management:

#### 1. Sharpe Ratio

The Sharpe Ratio measures the return earned above the risk-free rate per unit of total volatility. It is calculated as:

\[
\text{Sharpe Ratio} = \frac{R_p - R_f}{\sigma_p}
\]

- \( R_p \): Portfolio return
- \( R_f \): Risk-free rate (e.g., Treasury bond rate)
- \( \sigma_p \): Portfolio standard deviation (total risk)

#### 2. Sortino Ratio

The Sortino Ratio refines the Sharpe Ratio by penalizing only downside risk, so large gains do not count against a strategy. It is calculated as:

\[
\text{Sortino Ratio} = \frac{R_p - R_f}{\sigma_d}
\]

- \( R_p \): Portfolio return
- \( R_f \): Risk-free rate
- \( \sigma_d \): Downside deviation (the volatility of returns that fall below a target, typically zero or the risk-free rate)

#### 3. Calmar Ratio

The Calmar Ratio evaluates performance by comparing the compound annual growth rate (CAGR) to the maximum drawdown of an investment. It is calculated as:

\[
\text{Calmar Ratio} = \frac{\text{CAGR}}{\text{Max Drawdown}}
\]

- CAGR: Compound annual growth rate
- Max Drawdown: Maximum observed loss from peak to trough of the portfolio

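#### JavaScript Function to Calculate Sharpe Ratio

```javascript
// All three inputs must be measured over the same period (e.g., annualized)
function calculateSharpeRatio(portfolioReturn, riskFreeRate, standardDeviation) {
  return (portfolioReturn - riskFreeRate) / standardDeviation;
}
```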
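The other two ratios can be sketched the same way. Here downside deviation is taken as the root mean square of returns below the target, averaged over all periods, which is one common convention and an assumption of this example. The function names are illustrative, and the risk-free rate doubles as the downside target.

```javascript
// Downside deviation: root mean square of returns below the target,
// averaged over all periods (returns at or above the target count as zero).
function downsideDeviation(returns, target = 0) {
  const sumSquares = returns.reduce(
    (sum, r) => sum + Math.min(0, r - target) ** 2,
    0
  );
  return Math.sqrt(sumSquares / returns.length);
}

// Sortino ratio from a series of per-period returns.
// Returns Infinity if no return falls below the target.
function calculateSortinoRatio(returns, riskFreeRate = 0) {
  const mean = returns.reduce((sum, r) => sum + r, 0) / returns.length;
  return (mean - riskFreeRate) / downsideDeviation(returns, riskFreeRate);
}

// Calmar ratio: both inputs as positive fractions (e.g., 0.15 and 0.30).
function calculateCalmarRatio(cagr, maxDrawdown) {
  return cagr / maxDrawdown;
}

const periodReturns = [0.02, -0.01, 0.03, -0.02];
console.log('Sortino Ratio:', calculateSortinoRatio(periodReturns).toFixed(3));
console.log('Calmar Ratio:', calculateCalmarRatio(0.15, 0.3).toFixed(2));
```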

### Risk Management Checklist

Implementing a robust risk management process can help prevent significant losses and improve decision-making. Use the following checklist before trading and at the portfolio level:

- Set a clear risk-reward ratio for each trade.

- Define position sizing and ensure it aligns with your risk tolerance.

- Use stop-loss and take-profit orders to manage downside and capture gains.

- Regularly review portfolio exposure to avoid over-concentration in a single asset or sector.

- Monitor volatility and adjust positions accordingly.

- Evaluate correlations between portfolio assets to diversify effectively.

- Keep sufficient cash reserves to manage liquidity risk.

- Backtest strategies to evaluate performance under historical market conditions.

- Stay updated on macroeconomic factors and market news.

- Conduct regular stress tests to simulate worst-case scenarios.

### FAQ

1. What is the importance of risk-adjusted return metrics?

Risk-adjusted return metrics help investors evaluate how much return is generated for each unit of risk taken, enabling better decision-making.

2. How do I choose between the Sharpe Ratio and Sortino Ratio?

The Sortino Ratio is more appropriate when you want to focus on downside risk only, while the Sharpe Ratio considers both upside and downside volatility.

3. What is maximum drawdown and why is it critical?

Maximum drawdown measures the largest percentage drop from a peak to a trough in portfolio value. It shows the worst loss an investor would have had to sit through over the period measured.

4. When should I rebalance my portfolio?

Rebalance your portfolio periodically (e.g., quarterly) or when asset allocations deviate significantly from your initial targets.

5. Can I use these metrics for individual stocks?

Yes, these metrics can be applied to individual stocks, but they are more effective when used to evaluate overall portfolio performance.

### Conclusion

Effective risk management is the cornerstone of successful investing. By using metrics like the Sharpe Ratio, Sortino Ratio, and Calmar Ratio, traders can make informed decisions about risk and return. The accompanying checklist ensures a systematic approach to managing risk at both the trade and portfolio levels.

Adopting an engineering mindset toward risk management—focusing on metrics, processes, and continuous improvement—can help investors navigate market complexities and achieve long-term success. Remember, risk is inevitable, but how you manage it determines your outcomes.
